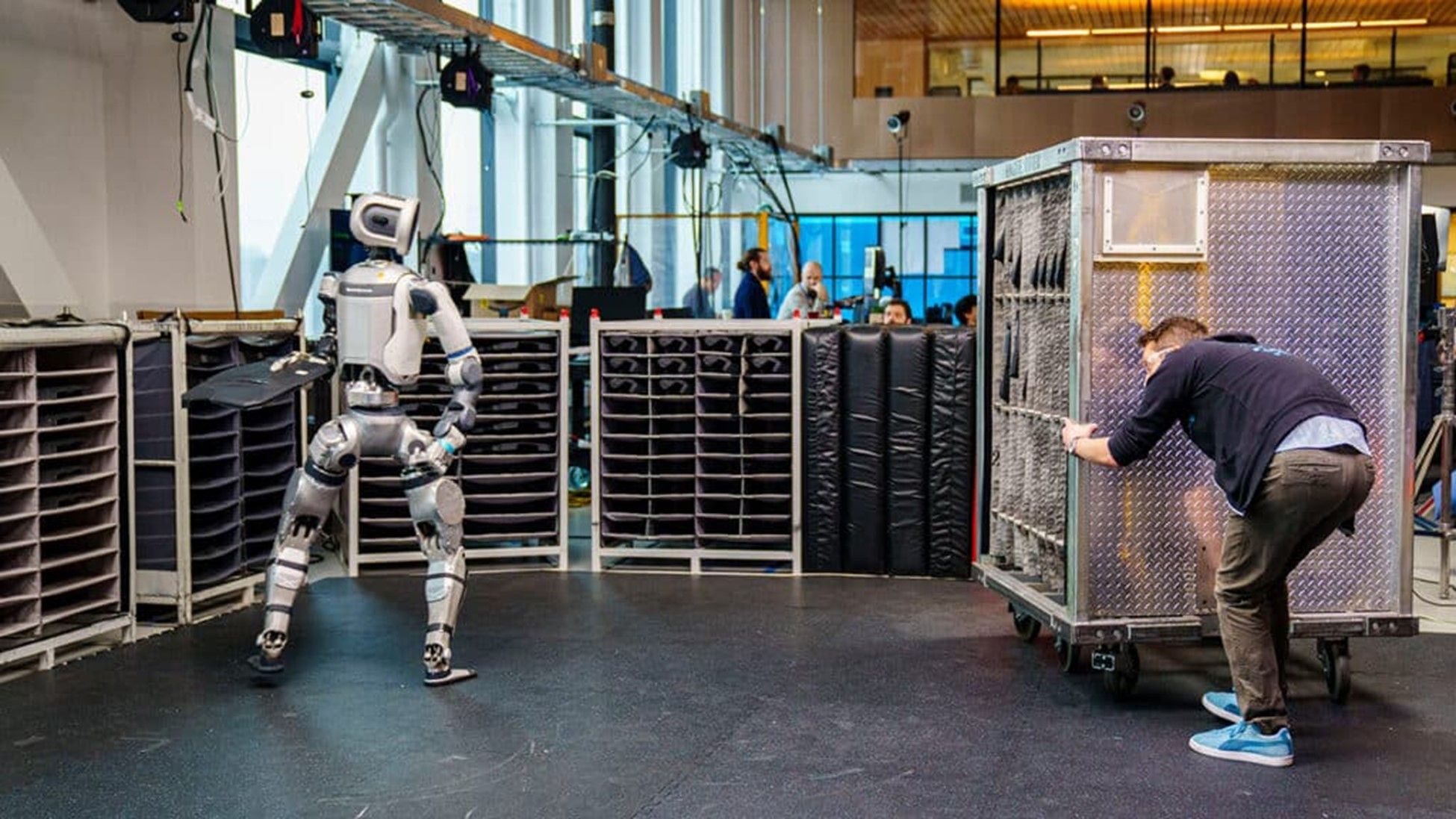

There are many ways robots see the world, and Boston Dynamics’ Atlas has its own way of perceiving its surroundings so it can carry out its tasks reliably. Here’s how Atlas’s vision system sees the world.

Atlas’s Perception System and Its Core Components

Atlas has two kinds of awareness: 2D and 3D. It also has an object pose estimation system called SuperTracker, as well as hand-eye calibration. We break each of these down below.

The 2D awareness system detects which objects or fixtures are in view and classifies them, for example as shelves or bins. It uses bounding boxes and two types of keypoints: outer keypoints (such as the corners of a fixture) and inner keypoints (such as the dividers and slots within a fixture).
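To make the 2D output concrete, here is a minimal Python sketch of what a single detection record could hold. The class and method names (`FixtureDetection`, `Keypoint2D`, `visible_keypoints`, `bbox_area`) are hypothetical illustrations, not Boston Dynamics’ actual data format.

```python
from dataclasses import dataclass, field


@dataclass
class Keypoint2D:
    x: float  # pixel column
    y: float  # pixel row
    visible: bool = True  # False when the point is occluded or out of frame


@dataclass
class FixtureDetection:
    label: str  # class name, e.g. "shelf" or "bin"
    bbox: tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels
    outer_keypoints: list[Keypoint2D] = field(default_factory=list)  # e.g. fixture corners
    inner_keypoints: list[Keypoint2D] = field(default_factory=list)  # e.g. dividers and slots

    def visible_keypoints(self) -> list[Keypoint2D]:
        """All outer and inner keypoints that are currently visible."""
        return [kp for kp in self.outer_keypoints + self.inner_keypoints if kp.visible]

    def bbox_area(self) -> float:
        """Bounding-box area in square pixels (0 for a degenerate box)."""
        x0, y0, x1, y1 = self.bbox
        return max(0.0, x1 - x0) * max(0.0, y1 - y0)
```

Keeping outer and inner keypoints in separate lists lets later stages treat them differently, for example by falling back on inner keypoints when corners are hidden.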

Atlas’s 3D awareness infers the pose (position and orientation) of fixtures relative to the robot. Like the 2D awareness system, it uses keypoints, and it adds odometry data (how the robot has moved) to align what it sees with its internal model. To handle occlusion, when some keypoints are hidden or out of view, the system relies on the inner keypoints, which are more numerous and more relevant for interaction. This lets it estimate a fixture’s pose even when the outer keypoints are missing.
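The occlusion-handling idea can be sketched with a planar simplification: fit a fixture’s pose from whichever model keypoints are currently visible, then use the robot’s odometry pose to place the fixture in a world frame. This sketch assumes 2D poses `(x, y, theta)`, known keypoint correspondences, and hypothetical keypoint names; it uses a standard closed-form 2D least-squares rigid fit (Procrustes), not Atlas’s actual 3D pipeline.

```python
import math
from typing import Optional

Point = tuple[float, float]
Pose2D = tuple[float, float, float]  # (x, y, theta in radians)


def fit_planar_pose(model: dict[str, Point],
                    observed: dict[str, Optional[Point]]) -> Pose2D:
    """Least-squares rigid fit mapping model keypoints onto the visible observed ones.

    `model` holds keypoints in the fixture's own frame; `observed` holds the same
    keypoints in the robot frame, with None for any that are occluded.
    """
    # Keep only keypoints that exist in the model and are currently visible.
    names = [n for n, p in observed.items() if p is not None and n in model]
    if len(names) < 2:
        raise ValueError("need at least two visible keypoints")
    k = len(names)
    mx = sum(model[n][0] for n in names) / k
    my = sum(model[n][1] for n in names) / k
    ox = sum(observed[n][0] for n in names) / k
    oy = sum(observed[n][1] for n in names) / k
    # Closed-form 2D rotation: atan2 of the summed cross and dot products
    # between centred model points and centred observed points.
    s_cross = s_dot = 0.0
    for n in names:
        ax, ay = model[n][0] - mx, model[n][1] - my
        bx, by = observed[n][0] - ox, observed[n][1] - oy
        s_cross += ax * by - ay * bx
        s_dot += ax * bx + ay * by
    theta = math.atan2(s_cross, s_dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation maps the rotated model centroid onto the observed centroid.
    tx = ox - (c * mx - s * my)
    ty = oy - (s * mx + c * my)
    return (tx, ty, theta)


def compose(a: Pose2D, b: Pose2D) -> Pose2D:
    """Express pose b (given in frame a) in a's parent frame, e.g. robot -> world."""
    ax, ay, at = a
    bx, by, bt = b
    c, s = math.cos(at), math.sin(at)
    return (ax + c * bx - s * by, ay + s * bx + c * by, at + bt)
```

Because the fit needs only two or more visible keypoints, hiding the outer corners still leaves the inner slots to pin down the pose, which mirrors why the more numerous inner keypoints matter. Composing the result with the robot’s odometry pose, as in `compose(robot_pose, fixture_in_robot)`, gives the fixture’s pose in the world frame.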

Scenes with several identical fixtures pose a distinct challenge. In this case, Atlas uses temporal coherence priors to tell them apart.
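One simple way to illustrate a temporal coherence prior is to assume that fixtures move little between frames, so each indistinguishable detection keeps the identity of the nearest previously tracked fixture. The sketch below, with hypothetical names, picks the assignment that minimizes total squared displacement; it is an assumed stand-in, not the prior Atlas actually uses.

```python
import math
from itertools import permutations

Point = tuple[float, float]


def associate_identical(previous: dict[str, Point],
                        detections: list[Point]) -> dict[str, Point]:
    """Label look-alike detections by keeping each identity near where it was last seen."""
    ids = list(previous)
    if len(ids) != len(detections):
        raise ValueError("this sketch assumes the same fixtures are seen in both frames")
    best_cost, best_order = math.inf, None
    # Exhaustive search is fine for a handful of fixtures; a larger tracker
    # would typically use the Hungarian algorithm instead.
    for order in permutations(range(len(detections))):
        cost = sum(math.dist(previous[i], detections[j]) ** 2 for i, j in zip(ids, order))
        if cost < best_cost:
            best_cost, best_order = cost, order
    return {i: detections[j] for i, j in zip(ids, best_order)}
```

Two bins that look identical in a single image can still be told apart over time, because the assignment that keeps each bin close to its previous position is far cheaper than the one that swaps them.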

The SuperTracker

SuperTracker fuses several data streams: what the robot’s joint sensors report and, when needed, force information. When the object is visible, it uses a render-and-compare method. What’s that, you ask? Given a candidate pose, the method renders the object’s CAD model at that pose, compares the rendering with the camera image, and then refines the pose.
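The render-and-compare loop can be illustrated with a toy version in which “rendering” just places the model’s 2D points at a candidate pose, “comparing” sums squared distances to observed points with known correspondences, and “refining” is a simple shrinking-step local search. All three are simplifying assumptions; a real system renders the CAD model into an image and compares it with the camera frame.

```python
import math

Point = tuple[float, float]
Pose2D = tuple[float, float, float]  # (x, y, theta in radians)


def render(model: list[Point], pose: Pose2D) -> list[Point]:
    """Toy 'renderer': place the model's points at the candidate pose."""
    x, y, th = pose
    c, s = math.cos(th), math.sin(th)
    return [(x + c * px - s * py, y + s * px + c * py) for px, py in model]


def compare(rendered: list[Point], observed: list[Point]) -> float:
    """Mismatch score: sum of squared distances between corresponding points."""
    return sum((rx - ox) ** 2 + (ry - oy) ** 2
               for (rx, ry), (ox, oy) in zip(rendered, observed))


def refine(model: list[Point], observed: list[Point], pose: Pose2D,
           step: float = 0.5, min_step: float = 1e-6) -> tuple[Pose2D, float]:
    """Render, compare, and nudge the pose while the mismatch keeps shrinking."""
    best = compare(render(model, pose), observed)
    while step > min_step:
        improved = False
        for i in range(3):  # try x, y and theta in turn
            for sign in (1.0, -1.0):
                cand = list(pose)
                cand[i] += sign * step
                score = compare(render(model, tuple(cand)), observed)
                if score < best:
                    pose, best, improved = tuple(cand), score, True
        if not improved:
            step /= 2  # no neighbouring pose helped, so search more finely
    return pose, best
```

Starting from a rough candidate such as `(0.0, 0.0, 0.0)`, `refine` repeatedly renders and compares until no small change to the pose lowers the mismatch.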

For objects that are occluded or only partly visible, Atlas maintains pose hypotheses and filters them using kinematic constraints, consistency checks, and other tests.
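A minimal sketch of filtering pose hypotheses is shown here. The two checks (a held object must stay near the gripper, as a kinematic constraint, and must not jump far from its last estimate, as a consistency check), along with the thresholds and names, are illustrative assumptions rather than Atlas’s actual filters.

```python
import math
from dataclasses import dataclass
from typing import Optional

Point = tuple[float, float, float]


@dataclass
class Hypothesis:
    position: Point  # candidate object position (m) in the robot frame
    score: float     # how well it explains the evidence; higher is better


def filter_hypotheses(hyps: list[Hypothesis], gripper: Point,
                      last_estimate: Optional[Point],
                      max_grip_offset: float = 0.15,
                      max_jump: float = 0.05) -> list[Hypothesis]:
    """Drop implausible hypotheses and return the rest, best first."""
    kept = []
    for h in hyps:
        # Kinematic constraint (assumed): an object held in the hand must be near the gripper.
        if math.dist(h.position, gripper) > max_grip_offset:
            continue
        # Consistency check (assumed): the object should not teleport between frames.
        if last_estimate is not None and math.dist(h.position, last_estimate) > max_jump:
            continue
        kept.append(h)
    return sorted(kept, key=lambda h: h.score, reverse=True)
```

Note that a high-scoring hypothesis can still be rejected if it is physically implausible, which is the point of combining appearance evidence with constraints.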

Hand-Eye Calibration

The robot’s hand-eye calibration is essential for precise manipulation. It ensures that what Atlas thinks its limbs, cameras, and body are doing matches what its sensors actually see. Calibration accounts for assembly and manufacturing tolerances, as well as physical deformation (for example, from temperature changes or repeated impacts) and aging.
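Real hand-eye calibration estimates a full rigid transform between the camera and the hand (classically by solving an AX = XB problem). The simplified sketch below assumes positions are already expressed in a common frame and estimates only a constant translation offset between where kinematics predicts the hand is and where the camera sees it, plus a drift check that could flag the need to recalibrate. The function names and the 5 mm tolerance are hypothetical.

```python
import math

Vec3 = tuple[float, float, float]


def estimate_offset(predicted: list[Vec3], observed: list[Vec3]) -> Vec3:
    """Least-squares constant offset: the mean of (observed - predicted)."""
    n = len(predicted)
    if n == 0 or n != len(observed):
        raise ValueError("need matching, non-empty lists of hand positions")
    return tuple(sum(o[k] - p[k] for p, o in zip(predicted, observed)) / n for k in range(3))


def rms_residual(predicted: list[Vec3], observed: list[Vec3], offset: Vec3) -> float:
    """RMS distance left over after applying the offset correction."""
    sq = [math.dist(tuple(p[k] + offset[k] for k in range(3)), o) ** 2
          for p, o in zip(predicted, observed)]
    return math.sqrt(sum(sq) / len(sq))


def needs_recalibration(predicted: list[Vec3], observed: list[Vec3],
                        offset: Vec3, tol: float = 0.005) -> bool:
    """Flag drift (e.g. from wear or thermal change) when the corrected error exceeds tol metres."""
    return rms_residual(predicted, observed, offset) > tol
```

Re-running the drift check periodically is one way to catch the slow changes mentioned above, such as deformation from repeated impacts, before they degrade manipulation.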

Atlas’s Future and Continued Development

Like many AI-driven robots on the market today, Atlas is not a perfect visual robot. The company is continuing its development, closing the gap between perception and actuation so that misplaced objects are corrected automatically.

Development is now moving toward a “foundation vision model”: a shared, general model that ties together perception and action, geometry, semantics, and physics. The goal is not just to perceive, but also to act in an intelligent and unified way.

Finally, Atlas needs greater agility and adaptability, which means handling more failure modes and more environmental variation.