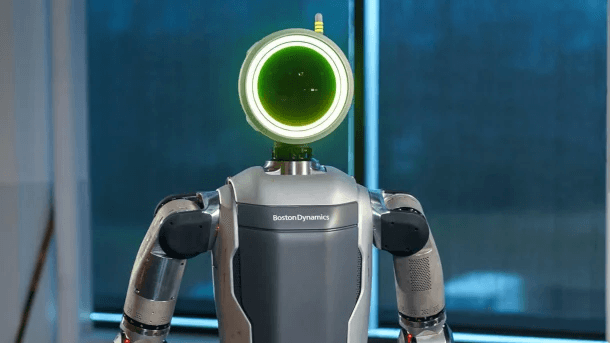

In the world of Large Behavior Models (LBMs) and humanoid robots, Atlas is getting a fresh round of development: Boston Dynamics has teamed up with Toyota Research Institute to enable more general, long-horizon manipulation and locomotion behaviors on the robot.

In case you are not aware, LBMs are language-conditioned policies, which means that humanoid robots like Atlas can interpret high-level instructions and execute the complex tasks given to them. With vision, proprioception, and whole-body control to draw on, there is likely more to come for Atlas. Without further ado, here are the updates you need to know so far.

Atlas’ Key Features and Capabilities

In the latest update shared by Boston Dynamics, Atlas can now perform whole-body coordination. That covers the main aspects of dynamic, mobile manipulation, such as shifting its center of mass, squatting, and avoiding self-collisions.

It can also run language-conditioned multi-task policies. These policies take proprioceptive state and image inputs, along with a language prompt that defines the task. The same policy also works across different embodiments and environments.
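To make those inputs concrete, here is a minimal Python sketch of what such a policy interface might look like. Everything in it is an assumption for illustration: the `Observation` and `ToyLanguageConditionedPolicy` names, the field layout, and the placeholder arithmetic are hypothetical and do not reflect the actual Boston Dynamics or Toyota Research Institute code. A real policy would replace the placeholder math with a learned network.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence


@dataclass(frozen=True)
class Observation:
    """One policy input: body state, camera images, and the task prompt."""
    proprioception: Sequence[float]        # e.g. joint positions (hypothetical layout)
    images: Dict[str, List[List[float]]]   # camera name -> grayscale pixel grid
    prompt: str                            # natural-language task description


class ToyLanguageConditionedPolicy:
    """Hypothetical stand-in for a learned policy; only the interface matters."""

    def __init__(self, action_dim: int, horizon: int) -> None:
        self.action_dim = action_dim  # number of values per action
        self.horizon = horizon        # number of future actions predicted at once

    def _embed_prompt(self, prompt: str) -> float:
        # Placeholder for a learned text encoder.
        return (sum(ord(c) for c in prompt) % 100) / 100.0

    def _embed_images(self, images: Dict[str, List[List[float]]]) -> float:
        # Placeholder for a learned vision encoder: mean pixel value.
        pixels = [p for grid in images.values() for row in grid for p in row]
        return sum(pixels) / len(pixels) if pixels else 0.0

    def predict(self, obs: Observation) -> List[List[float]]:
        """Return a short sequence of future actions for the prompted task."""
        task = self._embed_prompt(obs.prompt)
        vision = self._embed_images(obs.images)
        body = sum(obs.proprioception) / max(len(obs.proprioception), 1)
        base = task + vision + body
        return [[base + 0.01 * t] * self.action_dim for t in range(self.horizon)]
```

The point of the sketch is the shape of the call: one observation containing state, images, and a prompt goes in, and a short sequence of actions comes out. Changing only the prompt is what switches the task.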

It can now also chain multiple subtasks in sequence. Under a single language instruction, it can clear bins, move through the environment, and place, regrasp, and fold parts.

When it comes to robustness and reactivity, some policies are trained to handle perturbations such as lids closing, objects falling, or unexpected obstacles appearing. The system learns from examples of failures and disturbances, then adapts quickly without the need for manual re-programming by humans. With all this, it does look and feel as though Atlas has its own AI brain to help it analyze things and do its tasks better the next time.

Currently, the trained models allow for execution speed adjustments at inference time with minimal performance degradation in many tasks.
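The article does not say how the speed adjustment is implemented. One plausible way to picture it, assuming the policy outputs actions paired with the times at which they should be executed, is to rescale those times at inference. The Python sketch below shows that idea; the `TimedAction` type and the `retime` function are hypothetical names introduced for illustration only.

```python
from typing import List, Tuple

# (time offset in seconds, target values for that moment); hypothetical format
TimedAction = Tuple[float, List[float]]


def retime(trajectory: List[TimedAction], speedup: float) -> List[TimedAction]:
    """Compress or stretch a trajectory in time without changing its targets.

    A speedup of 2.0 halves every time offset, so the same motion is
    requested in half the time. The action values themselves are untouched.
    """
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return [(t / speedup, list(action)) for t, action in trajectory]
```

For example, `retime([(0.0, [0.0]), (1.0, [1.0])], speedup=2.0)` keeps both targets and moves the second one from 1.0 s to 0.5 s. In practice, how far a trajectory can be sped up before performance degrades would depend on the task and on the robot's dynamics.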

How Atlas Does It

Data for Atlas is collected via teleoperation, both on the real robot and in simulation. VR-based interfaces let teleoperators perform very fine, dexterous tasks as well as full-body motion.

There is also a training infrastructure in place that uses a diffusion transformer model. The team uses simulation to speed up early experiments, test policy changes, debug, and benchmark, and then deploys to hardware for real-world validation.

Future Steps Being Made

With everything mentioned above, here is also a look at what Boston Dynamics is doing to improve Atlas going forward.

As of this writing, the work calls for more data diversity, which means more environments, new tasks, and varied embodiments for Atlas to be exposed to. There is also a need to expand its capabilities in tactile and force feedback, making more dynamic and faster manipulation possible.

The company is also in the process of improving Atlas’ reasoning and its open-ended task generalization, so it can handle more complex instructions and have more autonomy in choosing substeps.

So that’s about it so far. We will be on the lookout for what Boston Dynamics has in store for us when it comes to Atlas and the future of humanoid robots.