December 23, 2024

MIT Researchers Teach Robots to Think Before They Act Through New Simulation Method

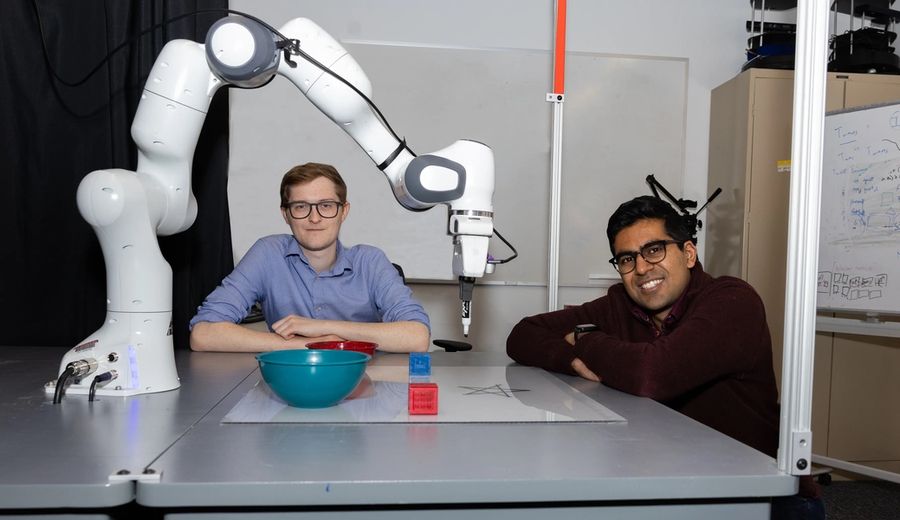

Researchers at MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL) have developed a method that helps robots safely tackle open-ended tasks. Called "Planning for Robots via Code for Continuous Constraint Satisfaction" (PRoC3S), the approach combines large language models (LLMs) with simulation so that robots can account for their physical limitations while carrying out complex chores.

The problem arises when robots are asked to perform tasks without accounting for their physical constraints, such as which locations they can reach and which obstacles are in the way. LLMs can generate plans from text, but they lack the awareness of the physical scene needed for safe execution. As a result, they can produce unrealistic plans that a human must step in to fix.

To overcome this issue, the CSAIL team created PRoC3S, which integrates vision models to simulate the robot's environment. This allows the robot to account for its physical limitations and test its strategies in a virtual setting. The process involves iterative trial and error: the robot formulates a plan, tests it in simulation, and refines it until a safe and feasible sequence of actions is achieved.

PRoC3S starts with an LLM pre-trained on internet text, which drafts the initial plans. Before the robot attempts a task, the researchers give the model a sample task that includes a description, a long-horizon plan, and the relevant environmental constraints. For instance, before the robot is asked to draw a star, the model is first shown how to draw a simpler shape, such as a square.
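To make the idea of a "sample task" concrete, here is a minimal sketch of how a one-shot prompt could be assembled from a worked example (description, plan, constraints) followed by the new task. The article does not describe PRoC3S's actual prompt format, so the `ExampleTask` class, its field names, the prompt layout, and the helper names inside the plan text (`square_corners`, `draw_line`) are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ExampleTask:
    """One worked example shown to the LLM (hypothetical field names)."""
    description: str
    plan: str                                   # long-horizon plan written out as text
    constraints: list[str] = field(default_factory=list)


def build_prompt(example: ExampleTask, new_task: str) -> str:
    """Assemble a one-shot prompt: a solved example, then the new, unsolved task."""
    constraint_text = "\n".join(f"- {c}" for c in example.constraints)
    return (
        "Example task\n"
        f"Description: {example.description}\n"
        f"Constraints:\n{constraint_text}\n"
        f"Plan:\n{example.plan}\n\n"
        "New task\n"
        f"Description: {new_task}\n"
        "Plan:\n"                               # the LLM is expected to continue from here
    )


# Hypothetical worked example: a square, shown before asking for a star.
# square_corners and draw_line are illustrative names inside a string, never called.
SQUARE_EXAMPLE = ExampleTask(
    description="Draw a square on the table with the pen.",
    plan=(
        "corners = square_corners(center, side)\n"
        "for start, end in zip(corners, corners[1:] + corners[:1]):\n"
        "    draw_line(start, end)"
    ),
    constraints=[
        "every point on the path must be reachable by the arm",
        "the path must not pass through obstacles",
    ],
)

prompt = build_prompt(SQUARE_EXAMPLE, "Draw a five-pointed star on the table.")
```

Ending the prompt on an open "Plan:" line is a common way to invite a model to continue in the same format as the example.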

The key step is the simulation phase. The robot tests each plan digitally, checking that every step satisfies all of its constraints. If a plan fails, the LLM generates a new one, and the cycle repeats until a plan passes. This is intended to ensure that only feasible, safe plans are carried out in real-world scenarios.
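The generate-and-test cycle can be sketched as a simple loop. This is a toy illustration under stated assumptions, not the PRoC3S implementation: a random proposer stands in for the LLM, the "simulation" is a 2D geometric check, and the arm's reach radius, the circular obstacle, and all function names are hypothetical.

```python
import math
import random
from typing import Optional

Point = tuple[float, float]

REACH_RADIUS = 0.8                    # hypothetical: arm base at (0, 0) reaches this far
OBSTACLES = [((0.3, 0.3), 0.15)]      # hypothetical: circular obstacles as (center, radius)


def propose_plan(rng: random.Random) -> list[Point]:
    """Stand-in for the LLM: a square with a sampled center and size, as a closed pen path."""
    cx, cy = rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)
    half = rng.uniform(0.05, 0.4)
    return [(cx - half, cy - half), (cx + half, cy - half),
            (cx + half, cy + half), (cx - half, cy + half), (cx - half, cy - half)]


def densify(path: list[Point], steps: int = 10) -> list[Point]:
    """Sample points along each segment so constraints are also checked between waypoints."""
    pts = []
    for (x0, y0), (x1, y1) in zip(path, path[1:]):
        for i in range(steps):
            t = i / steps
            pts.append((x0 + t * (x1 - x0), y0 + t * (y1 - y0)))
    pts.append(path[-1])
    return pts


def check_plan(path: list[Point]) -> Optional[str]:
    """Toy 'simulation': return None if every sampled point is valid, else a failure message."""
    for x, y in densify(path):
        if math.hypot(x, y) > REACH_RADIUS:
            return f"unreachable point ({x:.2f}, {y:.2f})"
        for (ox, oy), r in OBSTACLES:
            if math.hypot(x - ox, y - oy) < r:
                return f"collision at ({x:.2f}, {y:.2f})"
    return None


def plan_until_feasible(max_attempts: int = 1000, seed: int = 0):
    """Propose, test, and retry until a plan passes every check or attempts run out."""
    rng = random.Random(seed)
    feedback: list[str] = []          # failure reasons; this random proposer ignores them
    for attempt in range(1, max_attempts + 1):
        plan = propose_plan(rng)
        failure = check_plan(plan)
        if failure is None:
            return plan, attempt, feedback
        feedback.append(failure)
    return None, max_attempts, feedback


plan, attempts, feedback = plan_until_feasible()
```

The failure messages are collected so that, in a language-model-based version, they could be passed back to the proposer when it drafts the next plan. The random stand-in here simply ignores them.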

In simulation, PRoC3S achieved an 80% success rate at drawing stars and letters. It also stacked digital blocks accurately and placed items such as fruit on plates. The method consistently outperformed comparable approaches such as "LLM3" and "Code as Policies."

The researchers then applied PRoC3S in real-world settings. A robotic arm successfully arranged blocks in straight lines, sorted colored blocks into bowls, and positioned objects at the center of a table. These accomplishments demonstrate PRoC3S's potential for enabling robots to perform complex tasks in dynamic environments like homes.

“LLMs alone or traditional robotics systems cannot handle these tasks independently,” said Nishanth Kumar, a PhD student and co-lead author of the study. “However, their synergy makes it possible to solve open-ended problems.”

He emphasized how vision models create realistic digital environments that allow robots to reason about feasible actions throughout long-horizon plans.

Looking ahead, the CSAIL team aims to enhance PRoC3S by integrating advanced physics simulators and scalable data-search techniques. They also plan to extend its application to mobile robots like quadrupeds for tasks such as walking and environmental scanning. The ultimate goal is to develop home robots capable of reliably handling general requests like "bring me some chips" by testing action plans in simulated settings.

Eric Rosen from The AI Institute praised the approach for its potential to improve robotic safety and reliability.

“PRoC3S addresses the challenges of using foundation models by combining high-level task guidance with AI techniques that ensure safe actions,” he noted.

The work was supported in part by the National Science Foundation and the Air Force Office of Scientific Research, among others. By combining high-level reasoning from LLMs with detailed simulations, the MIT researchers are working toward robots that can safely and effectively perform complex tasks in a variety of environments.